Table of Contents

- Key Takeaways

- The Philosophy Behind CES

- What Is Customer Effort Score?

- When Is the Right Time to Send a CES Survey?

- How to Put Together a Good Customer Effort Score Question

- How to Interpret Customer Effort Score Results

- Customer Effort Score – The Good and the Bad

- Actionable Insights: What CES Scores Are Really Telling You

- Is Only Using Customer Effort Score Surveys Enough?

- Bottom line

Disloyal customers are costing businesses billions. But what actually triggers disloyalty? Research from CEB Global (now part of Gartner) found that the level of effort consumers put into interacting with a brand directly affects loyalty. In fact, according to CEB Global, around 96% of consumers who reported having difficulty solving a problem became more disloyal.

How do you know how easy it is for your clients to interact with your business, though? Well, that’s where the Customer Effort Score comes into play.

Curious about how CES can transform your customer experience strategy? Let’s dive in!

Key Takeaways

- Customer Effort Score measures how easy it is for customers to complete a task or resolve an issue, and low effort is proven to drive higher loyalty and reduce churn.

- CES helps businesses identify friction points in real-time, allowing them to address issues before they escalate and improve the overall customer experience.

- It can be customized to specific touchpoints, such as onboarding, checkout, or post-support interactions, making it a versatile tool for mapping customer effort across the journey.

- CES scores are only valuable if paired with actionable steps, whether through process improvements, better communication, or self-service tools.

The Philosophy Behind CES

Let’s get real for a moment: it doesn’t matter how amazing your product is if using it feels like a marathon with hurdles. The harsh truth is that customers will walk away – even from a brilliant product or service – if the experience is too complicated, frustrating, or time-consuming.

This is where the “ease breeds loyalty” principle is front and center. We’re wired to favor experiences that are smooth and effortless. Think about the last time you breezed through a self-checkout or got fast support. Chances are, you walked away feeling good about the interaction and the brand behind it.

Now flip that around. Ever had to call customer support five times to resolve a simple issue? Or wade through a confusing returns process? Even if the end result was fine, the effort you spent probably left a sour taste. This is the paradox of high-effort experiences: even the best products or outcomes can fail if getting there feels like too much work. Here’s where CES takes the floor.

What Is Customer Effort Score?

Customer Effort Score (CES) is generally defined as a type of customer survey that measures how easy it was for a client to interact with your business (solving an issue with customer support, making a purchase, signing up for a trial, etc.).

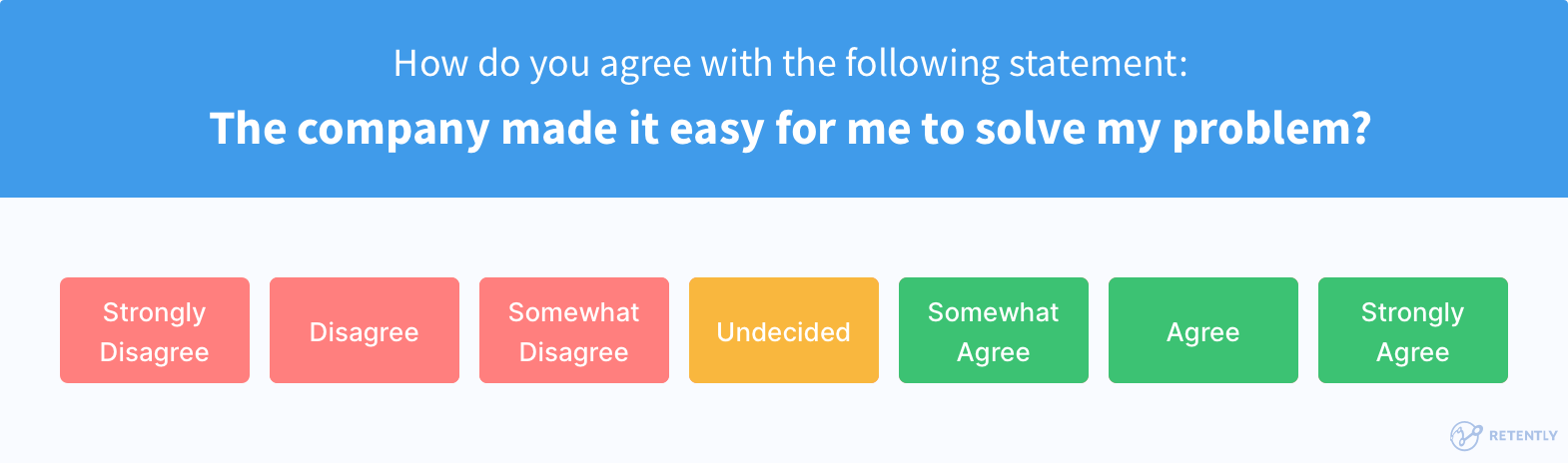

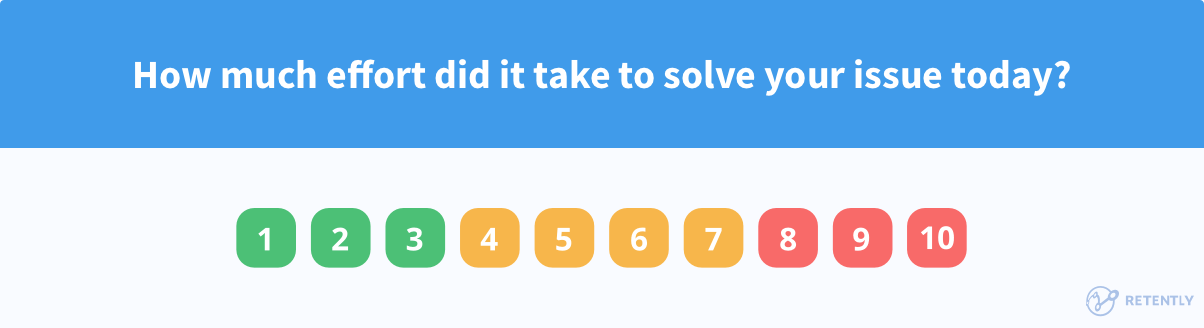

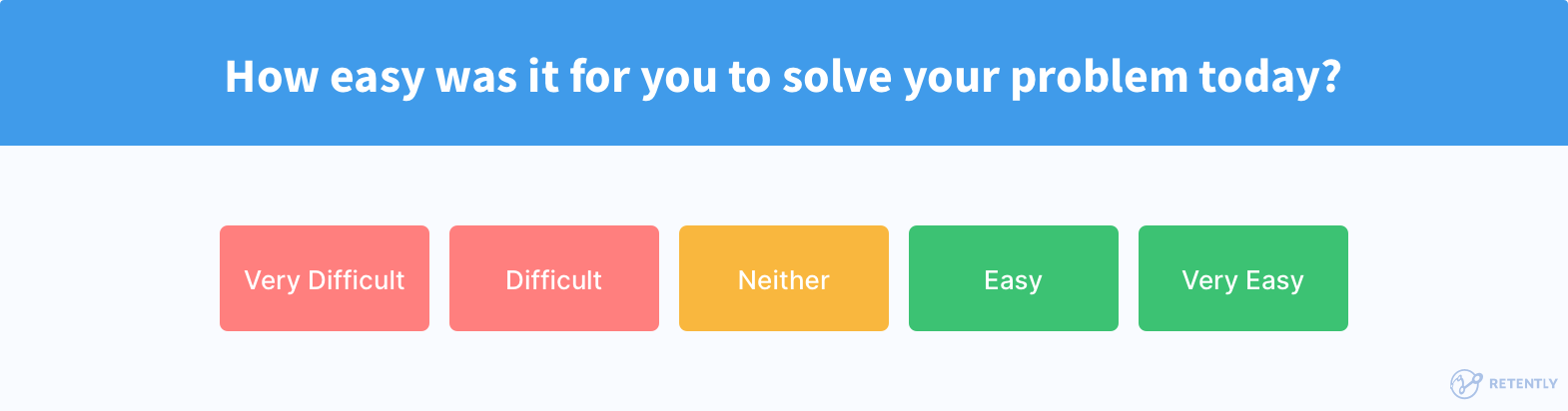

Consumers are generally asked how much they agree with a statement (“The company made it easy for me to solve my problem”, for instance), to rate their level of effort, or just to answer a question (“How easy was it for you to solve your problem today?”, for example).

CES Survey Types

There are a few metrics you can use to measure your Customer Effort Score, but keep in mind that they can change the way you calculate and score surveys:

- The Likert scale – This method involves a “Strongly Disagree/Strongly Agree” scale structured as such: Strongly Disagree – Disagree – Somewhat Disagree – Undecided – Somewhat Agree – Agree – Strongly Agree. The answers are usually numbered 1 to 7, and you can also color code each one to make everything more visually intuitive for respondents (having “Strongly Agree” in green and “Strongly Disagree” in red, for instance).

- The 1-10 scale – This metric involves having respondents offer an answer to your question in the 1-10 range. Generally, the 7-10 segment is associated with positive responses (if you’re asking customers how easy it was to do something, for instance). However, if your question asks the respondent to rate the level of effort, the 1-3 segment will be associated with positive results instead (since they represent low effort).

- The 1-5 scale – In this case, the answer options are as follows: Very Difficult – Difficult – Neither – Easy – Very Easy, and they are numbered from 1 to 5. You can also reverse the order.

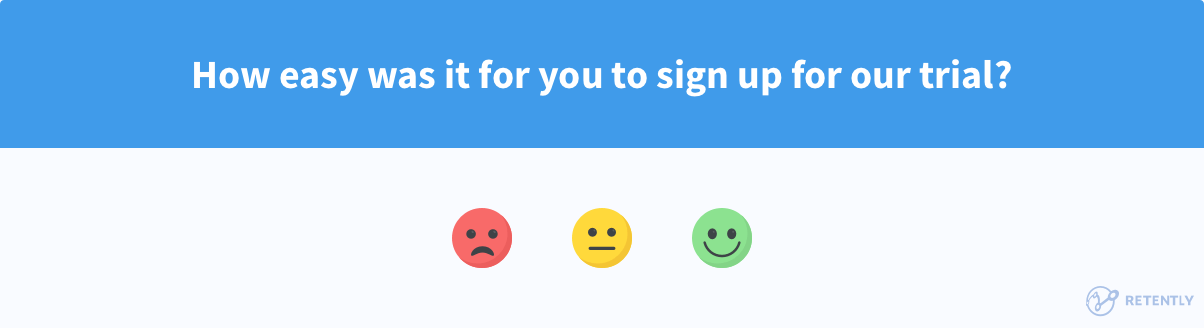

- Emotion Faces – While this metric is pretty simple, it’s useful if you run a lot of CES surveys for minor aspects of your product/service/website. Plus, it makes it easy and intuitive for respondents to answer quickly. Basically, you use Happy Face, Neutral Face and Unhappy Face images as responses, with the Happy Face usually meaning there was little effort required.
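To make the difference between these scales concrete, here is a minimal Python sketch that sorts a single answer into positive, neutral, or negative. The positive bands follow the ranges mentioned in this article (5-7 on the Likert scale, 4-5 on the 1-5 scale, 7-10 for an ease question and 1-3 for an effort question on the 1-10 scale). The negative bands on the 1-10 scale, as well as the names `BANDS` and `classify`, are assumptions made for illustration rather than an established standard.

```python
# (scale, question type) -> (highest valid answer, positive answers, negative answers)
# "ease" = a high number means easy; "effort" = a high number means a lot of effort.
BANDS = {
    ("likert_1_7", "ease"): (7, range(5, 8), range(1, 4)),
    ("scale_1_5", "ease"): (5, range(4, 6), range(1, 3)),
    ("scale_1_10", "ease"): (10, range(7, 11), range(1, 4)),    # negative band is assumed
    ("scale_1_10", "effort"): (10, range(1, 4), range(7, 11)),  # negative band is assumed
}


def classify(scale: str, question: str, answer: int) -> str:
    """Return 'positive', 'neutral', or 'negative' for one survey answer."""
    highest, positive, negative = BANDS[(scale, question)]
    if not 1 <= answer <= highest:
        raise ValueError(f"{answer} is outside the 1-{highest} range")
    if answer in positive:
        return "positive"
    if answer in negative:
        return "negative"
    return "neutral"


print(classify("scale_1_10", "ease", 2))    # a low answer to an ease question
print(classify("scale_1_10", "effort", 2))  # the same number on an effort question
```

The same number can land in opposite buckets depending on how the question is worded, which is why the question type is part of the lookup key.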

When Is the Right Time to Send a CES Survey?

Generally, CES web surveys are sent to customers during these key moments:

After an Interaction That Led to a Purchase

Sending out Customer Effort Score surveys after a client interacts with your product/service or service team and ends up purchasing is a great way to collect real-time feedback about what improvements you need to make to streamline the buying experience.

For example, you should always send out a CES survey after a customer signs up for a free trial or finishes the onboarding period. That’s especially relevant since poor onboarding accounts for 23% of average customer churn. It helps you quickly determine if any adjustments are necessary to make others more likely to buy from you.

Where CES Fits In:

- Onboarding: Ask, “How easy was it to get started with [our product/service]?” to gauge how smoothly new users can complete the setup process.

- Sign-Up or Registration: Use CES to measure how seamless your sign-up process is. Questions like “How simple was it to create an account?” can help you identify if there’s unnecessary friction.

- Product Discovery: For ecommerce or in-store shopping, ask something like, “How easy was it to find/locate the product you were looking for?” to uncover whether customers are struggling to navigate your offerings.

They say first impressions last a lifetime, and this couldn’t be more true for customer experience. Whether it’s a first-time user navigating your app or a shopper exploring your website, the ease of these initial interactions can set the tone for your entire relationship.

Right After a Client’s Interaction with Customer Service

Sending out a CES survey after a customer service touchpoint (such as email support tickets) lets you quickly assess the efficiency of your support team and identify areas for improvement to boost overall performance. You should also consider sharing such a survey after a customer finishes reading a Knowledge base article, since it will help you find out how helpful your content is.

In this case, sending out CES questionnaires at a specific interval is unnecessary. Since the question asks respondents how much effort they had to put into solving a problem, it makes more sense to deploy the survey after customer service touchpoints.

Where CES Fits In:

- Support Follow-Up: After a live chat, email, or phone call, ask, “How smooth was your experience getting support via [phone/email]?” This gives you direct feedback on how your support team is performing.

- Self-Service Tools: For FAQs or knowledge bases, try, “How easy was it to find the answer you needed?” to measure the effectiveness of your resources.

Even the best brands can’t avoid occasional issues. What sets great businesses apart is how easy they make it to fix those problems.

After Any Interaction Surfacing Usability Experience

The CES survey can be sent out after any interaction that could cause friction and result in a negative customer experience. For instance, you might follow up on the launch of a new feature to track its adoption and ask about potential pain points, or use the survey to learn more about the efficiency of your internal processes and the overall usability experience.

The question needs to revolve around that interaction and be triggered upon its completion to make recollections accurate and the received feedback actionable. With Retently you can now create survey questions tailored to specific events with just a click, so you are never short of ideas.

Where CES Fits In:

- Checkout and Payments – Ask, “How smooth was your checkout experience?” to uncover pain points like slow-loading pages, complicated forms, or unexpected fees – areas where customers may abandon their cart due to unnecessary friction.

- New Feature Adoption – After launching a new product feature, ask, “How easy was it to start using [new feature]?” to measure the learning curve.

- Subscription or Plan Changes – For subscription-based businesses, ask, “How easy was it to upgrade or modify your plan?” to ensure the process isn’t discouraging users from making changes.

Introducing the Concept of “Effort Hotspots”

Effort hotspots are those specific stages in the customer journey where friction tends to pile up. These are moments where customers are more likely to feel overwhelmed, frustrated, or stuck – and they’re prime candidates for CES measurement.

How does CES identify hotspots? By mapping CES scores across the customer journey, you can identify recurring low scores at specific touchpoints. For example, if your checkout process consistently receives low CES scores, it’s a clear sign to review and simplify it.
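As a rough illustration of that mapping, the Python sketch below groups responses by touchpoint, averages them, and flags any touchpoint that falls under a cut-off. It assumes a 1-7 agreement scale where 7 means “very easy”. The `find_hotspots` name, the 5.0 threshold, and the sample responses are all made up for the example.

```python
from collections import defaultdict
from statistics import mean


def find_hotspots(responses, threshold=5.0):
    """Return {touchpoint: average score} for touchpoints averaging below the threshold."""
    by_touchpoint = defaultdict(list)
    for touchpoint, score in responses:
        by_touchpoint[touchpoint].append(score)
    averages = {tp: mean(scores) for tp, scores in by_touchpoint.items()}
    return {tp: avg for tp, avg in averages.items() if avg < threshold}


# Made-up responses on a 1-7 scale (7 = very easy); not real survey data.
sample = [
    ("onboarding", 6), ("onboarding", 7),
    ("checkout", 3), ("checkout", 4), ("checkout", 2),
    ("support", 5), ("support", 6),
]

print(find_hotspots(sample))  # only checkout averages below 5.0 here
```

In practice, the threshold is a judgment call. A touchpoint that scores noticeably below the rest of your journey is usually a better signal than any fixed number.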

Hotspots can also vary by audience. A tech-savvy customer might find onboarding easy, while a less-experienced user may struggle – CES helps you uncover these differences.

Fixing effort hotspots is one of the fastest ways to improve overall customer satisfaction and loyalty. When you focus on reducing friction at these critical moments, you’re creating a smoother, more enjoyable experience that keeps customers coming back.

How to Put Together a Good Customer Effort Score Question

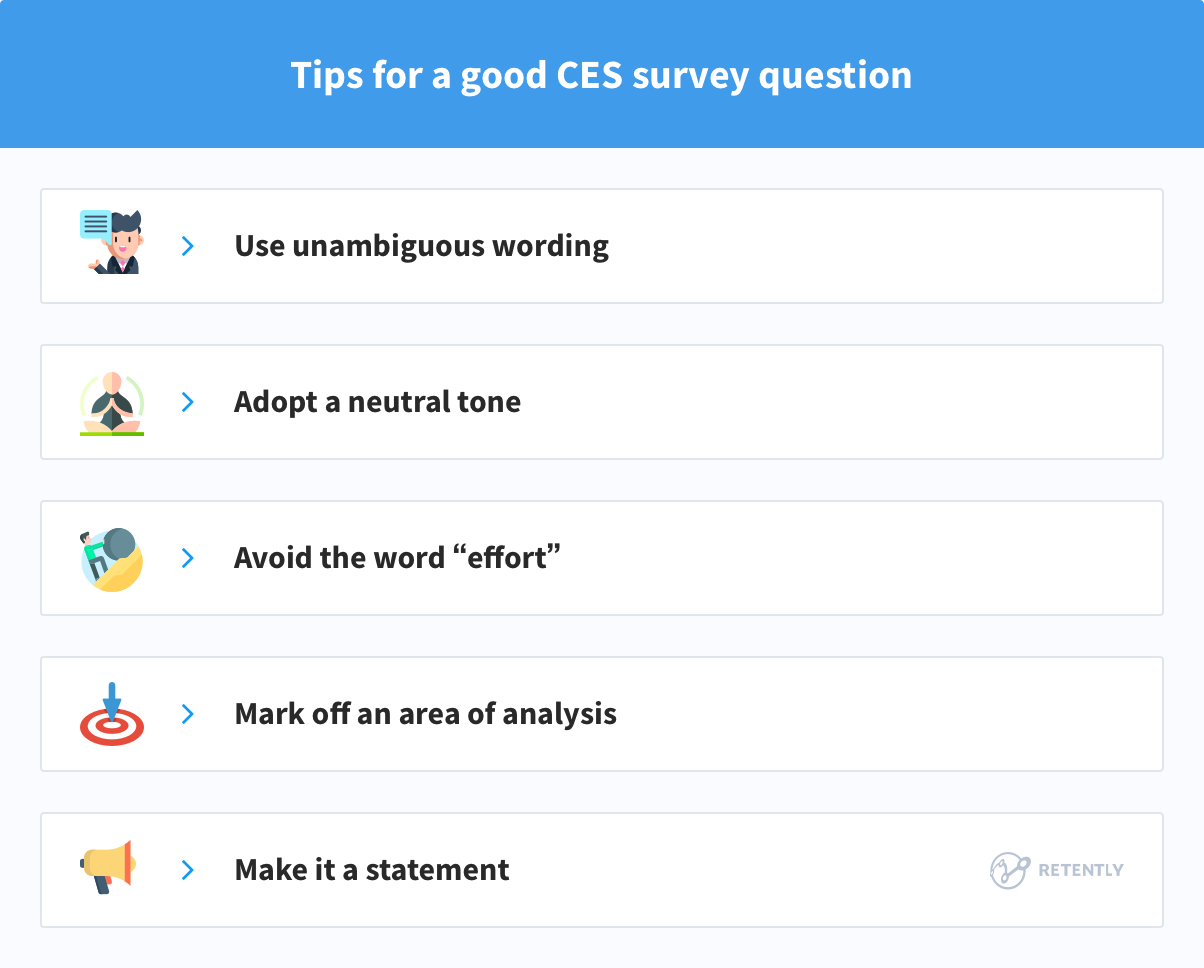

For starters, make sure the wording is as unambiguous as possible. Don’t ask customers about anything that doesn’t have to do with customer effort. Also, the tone of your question should be neutral so that the respondent doesn’t feel like you’re trying to favor a particular answer.

Ideally, you should also avoid using the word “effort” – ironic, we know. That’s because the word’s meanings can differ from language to language, so there’s a chance you might get irrelevant answers.

And make sure your CES survey question marks off an area of analysis – be it the overall experience a customer had with your website/brand, or just a singular customer interaction moment (like live chat).

Lastly, there are two ways to format the question:

- Make it a statement – This format is handy when using the 1-7 Likert scale. Here’s a Customer Effort Score question example of that: “How much do you agree with the following statement: The company’s website makes buying items easy for me.”

- Make it a direct question – This format is more suited to surveys that use the 1-10 and Happy/Unhappy face metrics. Here’s an example: “How much effort did it take to solve your problem?” or “How difficult was it for you to solve your problem?”

We personally recommend using the statement format – both because the 1-7 Likert scale is more accurate to work with when calculating your CES score, and because the direct question format usually relies on using the word “effort,” which we already mentioned can be a bit problematic if you have an international client base. If that’s not a concern, though, the direct question format can work well too.

How to Interpret Customer Effort Score Results

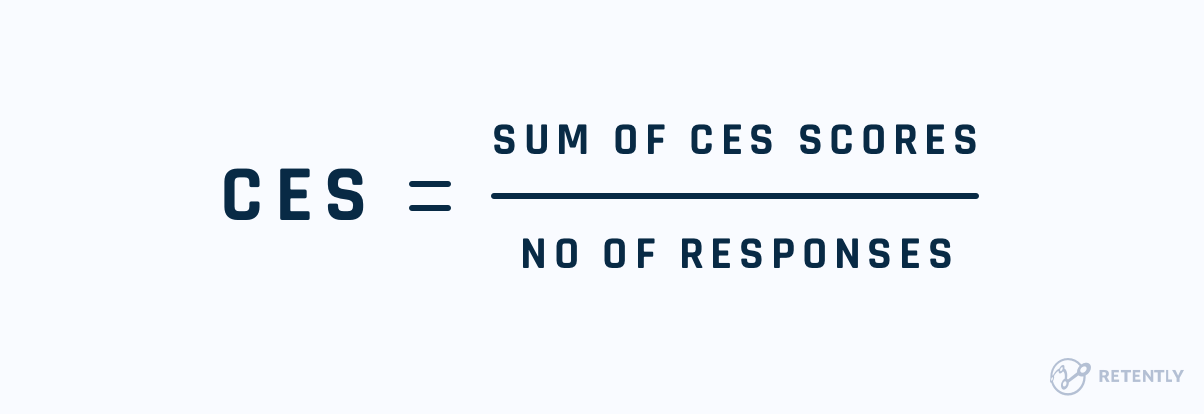

One of the easiest ways to measure CES results is to get an average score (X out of 10). This is generally done with the 1-10 Customer Effort Score scale. Simply take the total sum of your CES scores and divide it by the number of responses you have received.

So, if 100 people responded to your Customer Effort Score survey, and the total sum of their scores amounts to 700, that means your CES score is 7 (out of 10).
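The averaging approach can be sketched in a few lines of Python. The `average_ces` name is just an illustrative choice, and the sample list is constructed so that it matches the example above (100 responses adding up to 700).

```python
def average_ces(scores):
    """Average CES: the sum of all scores divided by the number of responses."""
    if not scores:
        raise ValueError("at least one response is needed")
    return sum(scores) / len(scores)


# 100 illustrative responses on a 1-10 scale that add up to 700.
scores = [10] * 50 + [4] * 50
print(average_ces(scores))  # 7.0
```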

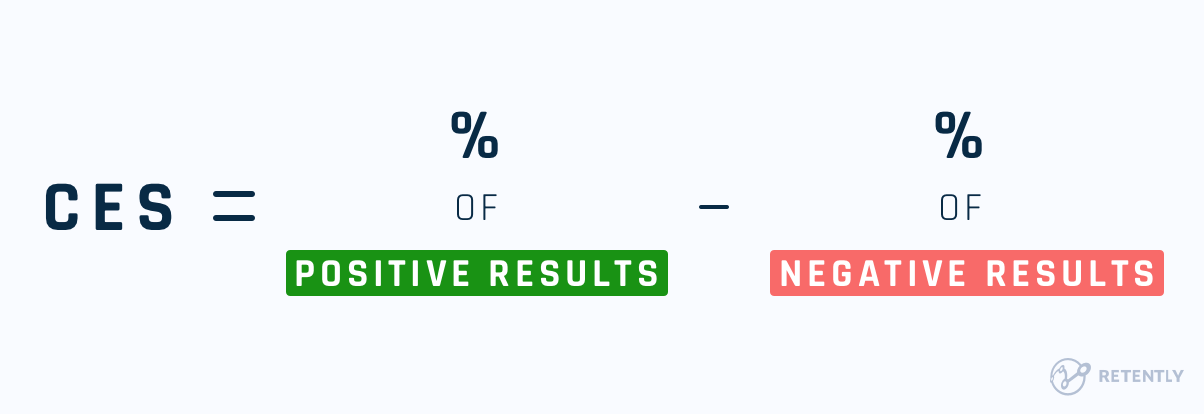

If you’re using other metrics (like Happy/Unhappy faces or an Agree/Disagree scale), you could also try performing a Customer Effort Score calculation by subtracting the percentage of respondents who offered a negative response from the percentage of people who responded positively. The neutral responses are normally ignored.

For instance, let’s say you had 400 respondents; 250 of them responded positively and the rest negatively. By subtracting 37.5% negative answers (150/400 x 100) from the 62.5% positive answers, you get a CES score of 25%.
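Here is the same subtraction as a small Python sketch. One assumption to flag: neutral answers are counted in the total number of respondents but are neither added nor subtracted, similar to how passives are handled in NPS. The article’s example has no neutral answers, so the result matches it either way. The `net_ces` name is illustrative.

```python
def net_ces(positive, negative, neutral=0):
    """Percentage of positive answers minus percentage of negative answers."""
    total = positive + negative + neutral  # assumption: neutrals stay in the total
    if total == 0:
        raise ValueError("at least one response is needed")
    return (positive - negative) / total * 100


print(net_ces(positive=250, negative=150))  # 25.0
```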

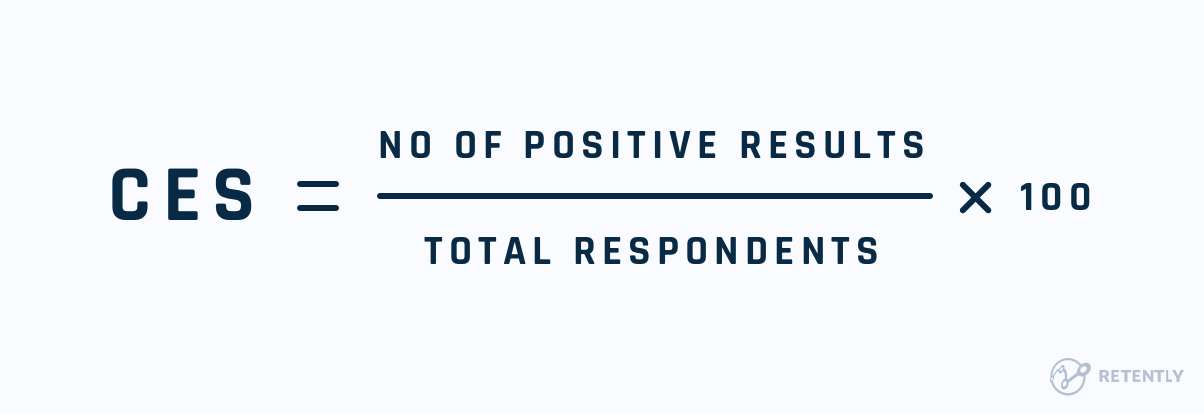

If you’re using the 1-7 Disagree/Agree scale, we also found it’s best to divide the total number of people who offered a 5-7 response (Somewhat Agree – Agree – Strongly Agree) by the total number of respondents. Afterward, multiply the result by 10 or 100 (depending on whether you use a 1-10 or 1-100 scale). You could do the same with the 1-5 scale (with 4 and 5 being the positive responses).

Here’s an example – if you had 100 respondents and 70 of them offered a positive response, your CES score will be either 7 on a 1-10 scale (70/100 x 10) or 70 on a 1-100 scale (70/100 x 100).
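For the share-of-positive-answers method, a minimal Python sketch could look like this. The `positive_share_ces` name and its parameters are hypothetical. `positive_from` is the lowest answer counted as positive (5 on the 1-7 scale, 4 on the 1-5 scale), and `out_of` is 10 or 100 depending on how you want to report the result.

```python
def positive_share_ces(answers, positive_from=5, out_of=100):
    """Share of positive answers, scaled to a score out of 10 or 100."""
    if not answers:
        raise ValueError("at least one response is needed")
    positive = sum(1 for answer in answers if answer >= positive_from)
    return positive * out_of / len(answers)


# 100 illustrative answers on the 1-7 scale: 70 positive, 30 not.
answers = [6] * 70 + [3] * 30
print(positive_share_ces(answers, out_of=100))  # 70.0
print(positive_share_ces(answers, out_of=10))   # 7.0
```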

What Is a Good Customer Effort Score?

The answer is a bit tricky – mostly because performing a Customer Effort Score benchmark against competitors is difficult since there is no clear industry-wide standard to compare against, and also because whether or not your CES score is a good one depends on your Customer Effort Score question and the metrics you use.

After all, if you use the Disagree/Agree scale for answers, where “Strongly Disagree” is numbered with 1 and “Strongly Agree” is numbered with 7, and have a statement like “The company made it easy for me to solve my problem,” you’ll clearly want to have a high CES score – ideally, one that’s over 5/50.

On the other hand, if your CES survey response scale associates 1/Happy Face metrics with “Less Effort” and 10/Unhappy Face metrics with “A Lot of Effort”, and directly asks customers how much effort they had to put into performing a certain action, you should strive to have a low CES score.

As in the case of other customer satisfaction scores, the best way to get a grasp of where you stand is to compare your current CES with your own score from a previous period, to see if your efforts are paying off. If the score is moving in the right direction (up for ease-based questions, down for effort-based ones), you are on the right track; otherwise, you should dig deep into customer feedback to see what you are missing.
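Because the “right direction” depends on how your question is worded, it can help to make that explicit when comparing periods. The Python sketch below is one way to do it. The `ces_trend` name and the `higher_is_better` flag are illustrative assumptions, not part of any standard CES method.

```python
def ces_trend(previous, current, higher_is_better=True):
    """Describe how CES changed between two periods, given the scale direction."""
    change = current - previous
    if change == 0:
        return "unchanged"
    improved = change > 0 if higher_is_better else change < 0
    return "improving" if improved else "worsening"


# Ease-based question: a higher score is better.
print(ces_trend(previous=5.2, current=5.8))
# Effort-based question: a lower score is better.
print(ces_trend(previous=4.0, current=3.0, higher_is_better=False))
```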

Customer Effort Score – The Good and the Bad

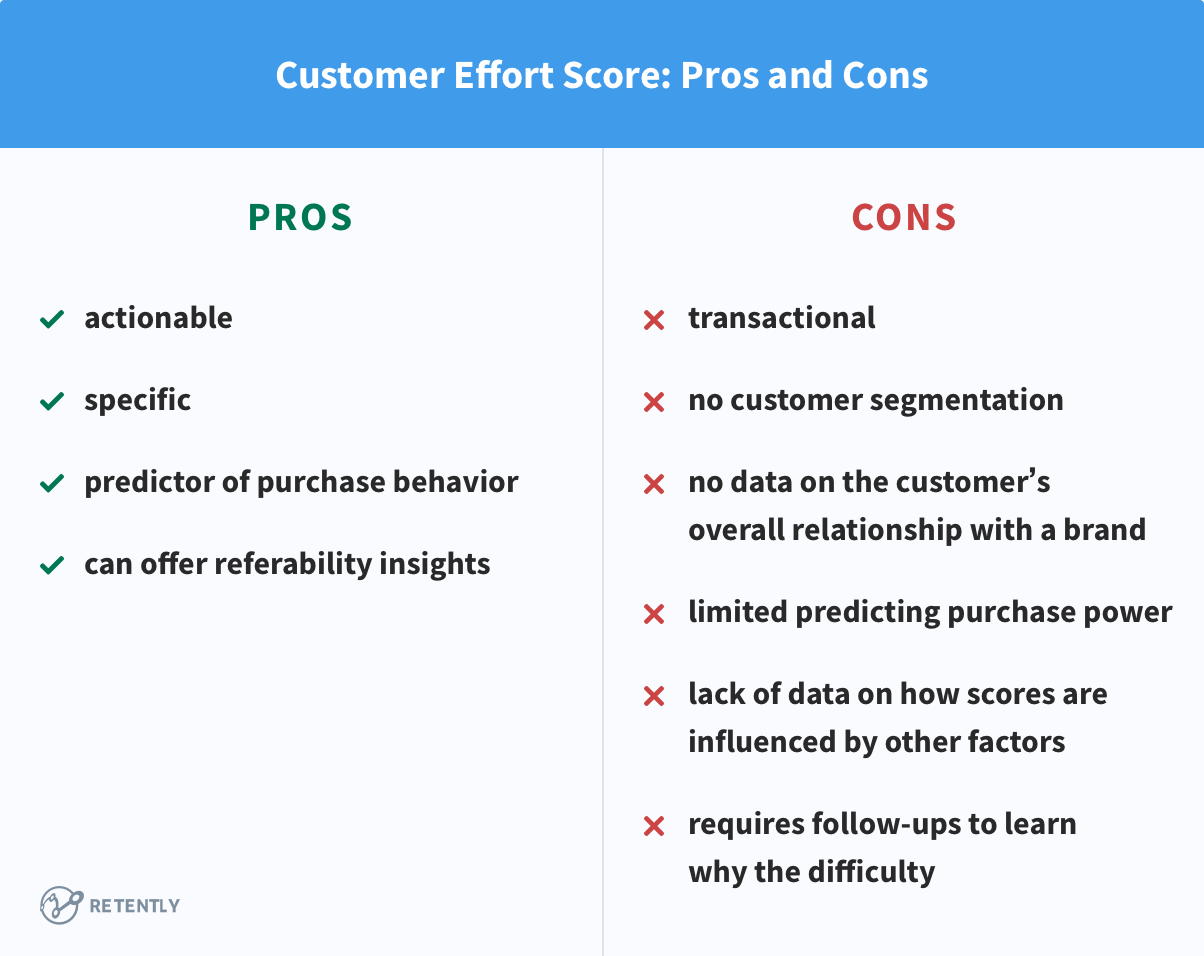

CES has both good and bad sides, but let’s see if the cons pale compared to the pros of using such a survey.

Advantages

One of the great things about CES surveys is that they are actionable and specific – they can quickly show which areas need improvement to streamline the customer experience.

Besides that, Customer Effort Score results have been found to be a strong predictor of future purchase behavior. In fact, according to HBR research, approximately 94% of customers who reported they experienced “low effort” in interactions with a business said they would buy again from it. Also, 88% of those consumers said they would spend more money too.

The same research also shows us that CES can give you an idea of how likely your customers are to refer your brand to others, and how they would speak of it. Basically, around 81% of customers who reported they put in a lot of effort when interacting with a business said they intended to speak negatively of the brand in question. So, it’s possible to assume that consumers who are happy with the low level of effort asked of them will likely recommend the brand to others or, at the very least, speak positively of it.

Disadvantages

While CES drawbacks aren’t really a deal-breaker, they are worth highlighting. For one, the Customer Effort Score can’t really tell you what kind of relationship a consumer has with your brand in general. A low effort score can improve customer satisfaction levels, but it does not necessarily point to loyalty toward a brand. Also, CES can’t tell you how your customers and their ratings are influenced by factors such as your competitors, products, and pricing.

Another issue worth mentioning is the fact that CES surveys don’t offer a way to segment customers by type. While Customer Effort Score surveys are good at predicting purchases, this is limited to a specific group of customers who, for example, interact with the support team or go through your self-service options. Since CES surveys are transactional in nature, they focus only on specific interactions and, therefore, on a limited group of users.

As one-off surveys, CES surveys offer data with short-term relevance. Hence, they must be triggered right after, and wherever, the interaction or transaction takes place (be it email, in-app or chat), so that the feedback is tied to context.

And lastly, CES surveys can tell you a customer had difficulty solving a problem, but they don’t tell you why. For example, if a consumer says it was hard to try and get something your brand can’t actually offer, that’s not a relevant result for your business.

Actionable Insights: What CES Scores Are Really Telling You

Measuring CES is great, but the real value comes from understanding what’s behind the numbers. A low CES score doesn’t just mean customers had a hard time – it tells you exactly where the friction is happening and what you can do about it.

By breaking CES down into actionable themes, you can pinpoint the real problem areas and build a strategy to make customer interactions smoother, faster, and more enjoyable.

1. Process Friction: What’s Slowing Customers Down?

Sometimes, the issue isn’t what customers are trying to do – it’s how they have to do it. If customers consistently report high effort, it’s a sign that something in the process is slowing them down.

Signs of Process Friction:

- Customers take too many steps to complete a simple action (e.g., checkout, returns, sign-ups).

- There are unexpected roadblocks, like mandatory account creation before making a purchase.

- Forms or workflows are too long, confusing, or repetitive.

How to Fix It:

✅ Streamline steps – reduce unnecessary clicks, auto-fill forms, and remove redundant steps.

✅ Optimize for speed – improve website load times and make sure mobile experiences are just as smooth as desktop ones.

✅ Test your own processes – walk through your customer journeys like a first-time user and find pain points firsthand.

2. Communication Gaps: Are Customers Getting the Right Information?

Customers don’t like feeling lost. If they can’t easily find answers or if instructions are unclear, they’ll feel like they have to work too hard to get what they need.

Signs of Communication Gaps:

- Customers ask the same support questions repeatedly, suggesting FAQs or help docs aren’t effective.

- There’s inconsistent messaging across channels (e.g., website says one thing, customer support says another).

- Instructions are too vague or technical, making it hard for non-experts to follow.

How to Fix It:

✅ Clarify instructions – rewrite FAQs, tooltips, and support content in simple, direct language.

✅ Align messaging across teams – make sure customer support, marketing, and product teams are all on the same page.

✅ Proactively provide information – send clear post-purchase or onboarding emails to guide customers before they hit friction.

3. Empowerment Issues: Are Customers Too Dependent on Support?

If customers frequently reach out for help, it’s a red flag that they don’t feel empowered to solve issues on their own.

Signs of Empowerment Issues:

- High volume of repeat support tickets for the same problem.

- Customers can’t complete basic tasks without assistance (e.g., changing account settings, tracking orders).

- Your self-service options (like chatbots or help centers) aren’t being used or aren’t helpful.

How to Fix It:

✅ Improve self-service resources – make sure help articles, chatbots, and FAQs are easy to find and actually useful.

✅ Enhance UI/UX – sometimes the issue isn’t the process, but how it’s presented. A more intuitive design can eliminate confusion.

✅ Educate customers proactively – consider short video tutorials, walkthroughs, or onboarding emails to help customers navigate your product without needing support.

The Effort Action Plan: Reducing Friction, One Step at a Time

Fixing high-effort experiences isn’t just about tweaking one thing – it’s about creating a system for continuous improvement. That’s where an Effort Action Plan is needed.

Here’s how to build one:

✅ Identify Effort Hotspots: Look at CES scores by touchpoint (onboarding, checkout, support, etc.) to see where customers struggle most.

✅ Categorize the Issues: Determine whether the problem is process friction, communication gaps, or an empowerment issue.

✅ Prioritize Fixes: Start with high-impact areas (e.g., if checkout is a major pain point, focus there first).

✅ Implement and Test Changes: Reduce unnecessary steps, clarify messaging, or improve self-service options – then track CES scores over time to measure improvement.

✅ Make It a Habit: Keep measuring and refining to ensure effort stays low as your business grows.

If customers tell you something was hard, believe them – and take action. CES gives you the blueprint for removing friction, helping you turn frustrating moments into effortless experiences that keep customers happy and loyal.

Is Only Using Customer Effort Score Surveys Enough?

While CES app surveys are a great source of customer insight, it’s better when you pair them with a satisfaction-oriented survey – like Net Promoter Score, for example.

For the sake of this article, we’ll throw in a quick definition: NPS is a customer satisfaction survey that asks consumers how likely they are (on a scale from 0 to 10) to recommend your brand to other people. NPS surveys allow you to send follow-up questions to ask why the customer gave a particular rating, essentially letting you find out what exactly you need to improve to boost customer loyalty.

Using CES alongside NPS will let you accurately measure both consumer effort and loyalty. The two metrics effectively complement each other and allow you to focus on two vital aspects of your business instead of just one – especially since NPS lets you segment customers. Moreover, it seems that top-performing low-effort companies tend to have an NPS that is 65 points higher than top-performing high-effort businesses, further showing the link between Customer Effort Score and Net Promoter Score.

Bottom line

CES is one more transactional instrument in your toolbox that can help you pinpoint weaknesses across service interactions and a product’s ease of use. Since customer experience expectations are ever-evolving, consistently keeping an eye on effort scores, overall customer satisfaction and trends in the data is already a necessity. However, more data isn’t necessarily better data. The metrics have value only when the respective feedback is converted into follow-up actions and product improvements.

Whether you are looking for a single customer satisfaction metric or a more complex approach, Retently has got you covered. You can have all your data – NPS, CSAT, CES – under one roof, with insightful analytics helping you sift through the feedback. Sign up for your free trial to see for yourself how easy it is to get started.

Alex Bitca

Greg Raileanu

Christina Sol
